Introduction

Microsoft has introduced Mu, a new on-device AI model. Announced alongside the latest Windows 11 Copilot+ updates, Mu powers the AI agents within Windows Settings, allowing users to describe what they want in natural language instead of hunting through menus. Because the model runs locally, Microsoft positions it as a step forward for on-device AI in speed, efficiency, and privacy.

What Is the Mu AI Model?

According to Microsoft, Mu is a small language model (SLM) with 330 million parameters, built to run entirely on-device using the system’s neural processing unit (NPU). Unlike large cloud-based models, Mu operates locally, providing instant responses without sending data to external servers. This offers not only faster performance but also enhanced privacy and security for users.
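To get a rough sense of why a 330-million-parameter model suits on-device use, the sketch below estimates how much storage the weights alone would need at a few common numeric precisions. The precisions listed are illustrative assumptions; the article does not state which format Mu actually uses, and the estimate ignores activations and runtime overhead.

```python
PARAMS = 330_000_000  # parameter count stated by Microsoft

# Bytes per weight at common precisions (illustrative, not Mu's confirmed format).
BYTES_PER_WEIGHT = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_mb(params: int, bytes_per_weight: float) -> float:
    """Return approximate weight storage in megabytes (10**6 bytes)."""
    return params * bytes_per_weight / 1e6


for name, width in BYTES_PER_WEIGHT.items():
    print(f"{name}: ~{weight_footprint_mb(PARAMS, width):,.0f} MB")
```

Even at 16-bit precision the weights come to well under a gigabyte, which is small next to the multi-gigabyte footprint of typical cloud-scale models.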

Mu uses a transformer-based encoder-decoder architecture. The encoder converts the user's input into a fixed-length representation, and the decoder then generates output tokens from that representation, such as the action to take or the response to show. This design lets Mu interpret multi-word commands and map them to specific settings.
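The control flow of an encoder-decoder model can be sketched with toy stand-ins. Nothing below is Mu's real code: `toy_encode`, `toy_decode_step`, `FIXED_LEN`, and `EOS` are hypothetical names, and the "model" just sums token lengths. The point is only the shape of the loop: the encoder runs once per request, and the decoder is called repeatedly against the same fixed-length representation.

```python
from typing import List, Sequence

FIXED_LEN = 4  # hypothetical size of the latent representation
EOS = -1       # hypothetical end-of-sequence marker


def toy_encode(tokens: Sequence[str]) -> List[int]:
    """Stand-in encoder: fold input of any length into FIXED_LEN numbers."""
    latent = [0] * FIXED_LEN
    for i, tok in enumerate(tokens):
        latent[i % FIXED_LEN] += len(tok)
    return latent


def toy_decode_step(latent: List[int], generated: List[int]) -> int:
    """Stand-in decoder step: emit one value per call, then EOS."""
    pos = len(generated)
    return latent[pos] if pos < len(latent) else EOS


def generate(tokens: Sequence[str], max_new_tokens: int = 16) -> List[int]:
    latent = toy_encode(tokens)  # the encoder runs once per request
    output: List[int] = []
    for _ in range(max_new_tokens):
        nxt = toy_decode_step(latent, output)  # the decoder runs once per output token
        if nxt == EOS:
            break
        output.append(nxt)
    return output


print(generate(["lower", "screen", "brightness"]))
```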

Optimized for Windows 11 AI Agents

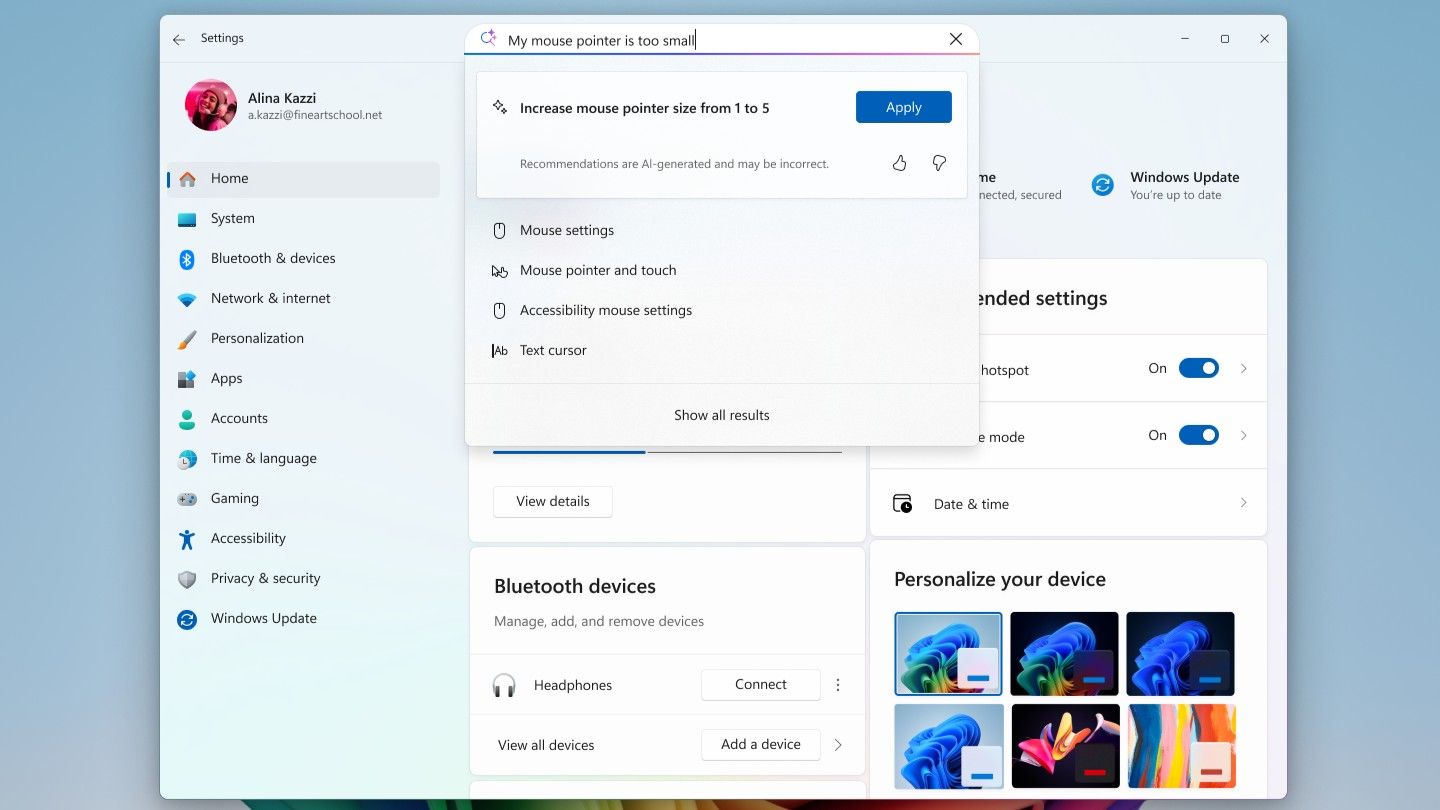

The Mu AI model was specifically optimized to meet the needs of AI agents in Windows Settings. These agents allow users to describe tasks in natural language, and Mu can either navigate to the correct setting or automatically perform the requested action. Microsoft claims Mu responds at more than 100 tokens per second, meeting the demanding user experience requirements of real-time system interaction.
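To put the throughput figure in perspective, the short calculation below converts "more than 100 tokens per second" into an upper bound on generation time. The response lengths are hypothetical, and the bound covers token generation only, not any other processing in the pipeline.

```python
def max_generation_seconds(num_tokens: int, tokens_per_second: float = 100.0) -> float:
    """Upper bound on generation time if throughput is at least tokens_per_second."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Hypothetical response lengths for a settings action.
for n in (5, 20, 50):
    print(f"{n} tokens: under {max_generation_seconds(n):.2f} s")
```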

For instance, if a user types, “lower screen brightness at night,” Mu interprets the request and adjusts the system brightness setting. In contrast, a one-word query like “brightness” may still trigger traditional keyword-based search results, because it carries too little context for the agent to infer what the user wants.
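The split between agent handling and keyword search could be approximated by a simple routing rule. This is a minimal sketch under stated assumptions: the word-count threshold and the function name `route_query` are hypothetical, and the article does not describe how Windows actually makes this decision.

```python
def route_query(query: str, min_words: int = 2) -> str:
    """Send multi-word queries to the agent and short ones to keyword search.

    min_words is a hypothetical threshold chosen for illustration.
    """
    words = query.split()
    return "agent" if len(words) >= min_words else "keyword_search"


for q in ("brightness", "lower screen brightness at night"):
    print(f"{q!r} -> {route_query(q)}")
```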

Built from Microsoft’s Phi Models

Mu builds on Microsoft’s earlier Phi models. Distilled from these larger models, Mu maintains high performance while significantly reducing size and resource requirements. Microsoft claims that Mu, despite being about one-tenth the size of Phi-3.5-mini, delivers comparable capabilities.

The training process involved extensive optimization using Azure Machine Learning with powerful A100 GPUs. Techniques such as low-rank adaptation (LoRA), synthetic labeling, and noise injection were employed to fine-tune Mu for the specific demands of Windows Settings.
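Low-rank adaptation keeps the original weight matrix frozen and trains two small matrices whose product is added to it. The arithmetic below shows why that is cheap. The layer dimensions and rank are hypothetical values chosen for illustration, not Mu's actual configuration.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable weights for a LoRA adapter: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


# Hypothetical layer shape and adapter rank.
d_out, d_in, rank = 1024, 1024, 8
full = full_finetune_params(d_out, d_in)
lora = lora_trainable_params(d_out, d_in, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```

With these example numbers, the adapter trains a small fraction of the weights that full fine-tuning would touch, which is the main reason the technique is popular for task-specific tuning.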

Training with Massive Data for Precision

To prepare Mu for real-world scenarios, Microsoft scaled its training data significantly. Initially trained on 50 system settings, Mu’s scope was expanded to hundreds of settings, using more than 3.6 million examples. This extensive dataset allowed the model to quickly learn how users typically phrase requests and to handle ambiguous commands effectively.

For instance, a command like “increase brightness” is ambiguous because it could refer to the built-in display or an external monitor. The model was trained to resolve such cases by prioritizing the most commonly used settings. Microsoft continues to refine this behavior as more users engage with the AI agents.
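One simple way to express "prefer the most commonly used setting" is to rank the candidate settings by how often each is used. The sketch below assumes hypothetical setting names and usage counts; it illustrates the tie-breaking idea only and is not a description of Mu's training or inference logic.

```python
from typing import Mapping


def resolve_ambiguous_setting(usage_counts: Mapping[str, int]) -> str:
    """Pick the candidate setting with the highest usage count."""
    if not usage_counts:
        raise ValueError("no candidate settings")
    return max(usage_counts, key=lambda name: usage_counts[name])


# Hypothetical candidates for the query "increase brightness".
candidates = {
    "internal_display_brightness": 900,
    "external_monitor_brightness": 100,
}
print(resolve_ambiguous_setting(candidates))
```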

Why On-Device AI Matters

One of Mu’s defining features is that it is deployed entirely on-device. Unlike cloud-based models that rely on internet connectivity, Mu runs locally on Copilot+ PCs. This approach delivers multiple benefits:

- Faster response times: Local processing eliminates cloud latency.

- Increased privacy: User data never leaves the device.

- Energy efficiency: Optimized for NPU-powered processing.

- Reduced bandwidth usage: No continuous data streaming required.

According to Microsoft, this aligns with its broader strategy of developing AI-first experiences in Windows 11 Copilot+, where AI deeply integrates with the OS to improve productivity, personalization, and accessibility.

Challenges and Limitations

Despite its capabilities, Mu is not without limitations. Microsoft acknowledged that the model performs better with more detailed queries, struggling occasionally with very short or vague inputs. To mitigate this, Windows continues to display traditional search-based results when insufficient context is provided.

Another challenge involves multi-functional settings. For example, “increase brightness” could relate to the device’s built-in screen or an external monitor. Mu currently focuses on the most commonly used settings, but Microsoft is actively refining its ability to handle such nuances more flexibly over time.

Future Potential of Mu AI

The successful deployment of Mu in Windows 11 Settings demonstrates the growing viability of small language models (SLMs) for real-time, on-device tasks. As NPUs become standard across more consumer devices, we can expect increasingly sophisticated AI-powered system features that operate locally, privately, and with lightning speed.

Beyond Windows Settings, Mu or its successors could eventually power AI agents across the entire OS, helping users manage files, troubleshoot issues, automate tasks, and even provide personalized recommendations based on user behavior.

Conclusion

With Mu, Microsoft takes a significant step forward in delivering on-device AI that is fast, efficient, and deeply integrated into the Windows ecosystem. As AI agents evolve, users can expect increasingly natural interactions with their PCs, making complex settings and adjustments as simple as describing them in plain language.

Microsoft’s innovations with Mu showcase a new direction for AI where powerful models no longer require cloud dependence, opening up vast possibilities for privacy-first, highly responsive user experiences in the years ahead.
